Capturing Emotion Using Software

Living in a bilingual country has taught me a lot about language and communication -- especially inter-species communication. For example, on my walks and hikes, I often run into dogs that do not understand English commands. They were trained entirely in French. They run ahead of their masters on the trail and meet me first. Of course, I don't know that they belong to a French-speaking family, but it really doesn't matter. Dogs are good at getting the gist of commands from humans. I can get a well-behaved dog to sit and wait for its master, even though it doesn't understand English.

How does a dog do this? A dog can pick up a lot of clues from the way a command sounds. If I firmly tell the dog to "Sit" in a sharp voice, it usually does. A dog can judge the type of command by the timbre of the voice, working it out from the qualities of the sound rather than from the word itself.

Can a computer be trained to do the same? I realized, of course, that if we are ever to get a true pass on a Turing test, where a person can't tell whether they are talking to a computer or a human, we need software that emotes. To have emotion, one must be capable of recognizing emotion. To find an easy algorithm, I went back to the example of the French-speaking dogs.

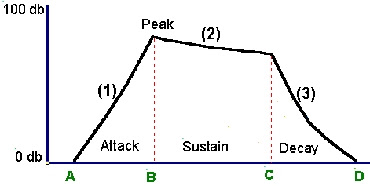

When I analyze what is going on, I realize that what dogs respond to are the various components of the sound of a command. The primary components of the sound wave are attack, sustain and decay. A command to sit or stay has a fast attack, a short sustain and a fast decay. A soothing sound has a slow attack, a long sustain and a slow decay.

Attack is the rate at which the sound reaches its peak amplitude. Sustain is how long the body of the sound lasts after it reaches peak amplitude, and decay is how it fades away. A sound that scares you has a fast attack. It also grabs your attention.
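
To make these three definitions concrete, here is a minimal Python sketch that estimates them from an amplitude envelope. It is an illustration under stated assumptions, not the project's source code: the names `ASD`, `measure_asd` and `ramp_envelope` are hypothetical, the input is assumed to be an already-extracted envelope (non-negative loudness values), and the 10% and 90% of peak thresholds are arbitrary choices for the example.

```python
from typing import List, NamedTuple, Sequence


class ASD(NamedTuple):
    attack: float   # seconds to rise from the low to the high threshold
    sustain: float  # seconds spent at or above the high threshold
    decay: float    # seconds to fall from the high back to the low threshold


def measure_asd(envelope: Sequence[float], sample_rate: float,
                low: float = 0.1, high: float = 0.9) -> ASD:
    """Estimate attack, sustain and decay times from an amplitude envelope.

    `low` and `high` are fractions of the peak amplitude. They are
    assumptions made for this sketch, not values from the article.
    """
    peak = max(envelope)
    if peak <= 0:
        raise ValueError("envelope has no positive amplitude")
    above_low = [i for i, v in enumerate(envelope) if v >= low * peak]
    above_high = [i for i, v in enumerate(envelope) if v >= high * peak]
    onset, offset = above_low[0], above_low[-1]
    rise_end, fall_start = above_high[0], above_high[-1]
    return ASD(
        attack=(rise_end - onset) / sample_rate,
        sustain=(fall_start - rise_end) / sample_rate,
        decay=(offset - fall_start) / sample_rate,
    )


def ramp_envelope(attack_n: int, sustain_n: int, decay_n: int) -> List[float]:
    """Build a synthetic envelope: linear rise, flat hold, linear fall."""
    rise = [i / attack_n for i in range(attack_n)]
    hold = [1.0] * sustain_n
    fall = [1.0 - (i + 1) / decay_n for i in range(decay_n)]
    return rise + hold + fall


# A sharp, command-like sound versus a slow, soothing one (1000 samples/s).
command = measure_asd(ramp_envelope(10, 20, 10), sample_rate=1000)
soothing = measure_asd(ramp_envelope(200, 400, 300), sample_rate=1000)
print(command)
print(soothing)
```

On these synthetic envelopes the command-like sound comes out with shorter attack, sustain and decay times than the soothing one. Real speech would first need an envelope extracted from the raw waveform, which this sketch leaves out.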

So the algorithm for a software program to determine emotion is to break the sound wave down into attack, sustain and decay. The really neat part about doing it this way is that it is language independent. Whether you say "Cara mia, ti voglio bene", or "Ma Belle, je t'aime" or "My darling, I love you", there will be no fast attacks or short decays.

Individual words that convey emotion usually demonstrate onomatopoeia, that is, they sound like the thing they describe. For example, the word hiccup sounds like a hiccup, and a smooth sibilant stream is peaceful. Hence, by rating each word for ASD (Attack/Sustain/Decay), one can rate a sentence for the emotion it has. One can do it mathematically or let a series of artificial neural nets be trained to recognize various ASD patterns. The bottom line is that while one cannot get the full range of emotion from just the components of the sound, one can use them as a good starting point for working out what emotions are being expressed.
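
As one possible reading of the "do it mathematically" route, the sketch below rates a sentence from per-word ASD values. Everything in it is an assumption for illustration: the function names `sharp_fraction` and `rate_sentence`, the 0.05 second cutoffs, the 0.5 majority rule, and the two labels are all made up here, and a trained neural net could replace the whole rule.

```python
from typing import Sequence, Tuple

# One word's (attack, sustain, decay) times in seconds.
WordASD = Tuple[float, float, float]

# Assumed cutoffs for this sketch: a word counts as "sharp" when both
# its attack and its decay are shorter than these values.
FAST_ATTACK_S = 0.05
FAST_DECAY_S = 0.05


def sharp_fraction(words: Sequence[WordASD]) -> float:
    """Return the fraction of words with a fast attack and a fast decay."""
    if not words:
        raise ValueError("sentence has no words")
    sharp = sum(1 for attack, _sustain, decay in words
                if attack < FAST_ATTACK_S and decay < FAST_DECAY_S)
    return sharp / len(words)


def rate_sentence(words: Sequence[WordASD]) -> str:
    """Label a sentence 'commanding' if at least half its words are sharp."""
    return "commanding" if sharp_fraction(words) >= 0.5 else "soothing"


# Hypothetical measurements: a barked "Sit!" versus a slow endearment.
print(rate_sentence([(0.01, 0.05, 0.02)]))
print(rate_sentence([(0.20, 0.50, 0.30), (0.15, 0.40, 0.25)]))
```

A two-label rule like this only separates sharp from soft delivery. It is a starting point in the same sense as the paragraph above, not a full emotion classifier.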

So there you have it, space cadets -- a methodology for software that emotes. Source code to follow. Status of project for serious application -- requires funding.
